Apple has confirmed its previously reported plans to deploy new technology that will let its products detect child sexual abuse imagery. According to the US-based tech giant, the technology will be available for iOS, macOS, watchOS, and iMessage.

Apple also published a new Child Safety page on its official website, which provides some crucial details about how its child safety project will work.

On that new Child Safety page, the company said that new versions of iOS and iPadOS, due for release in the US later this fall, will come with “new applications of cryptography to help limit the spread of CSAM online, while designing for user privacy.” CSAM stands for Child Sexual Abuse Material.

To be a little more specific, Apple will be introducing these new Child Safety features in three areas. It’s also worth noting that all of these CSAM-prevention and CSAM-mitigation functionalities were developed in collaboration with child safety experts.

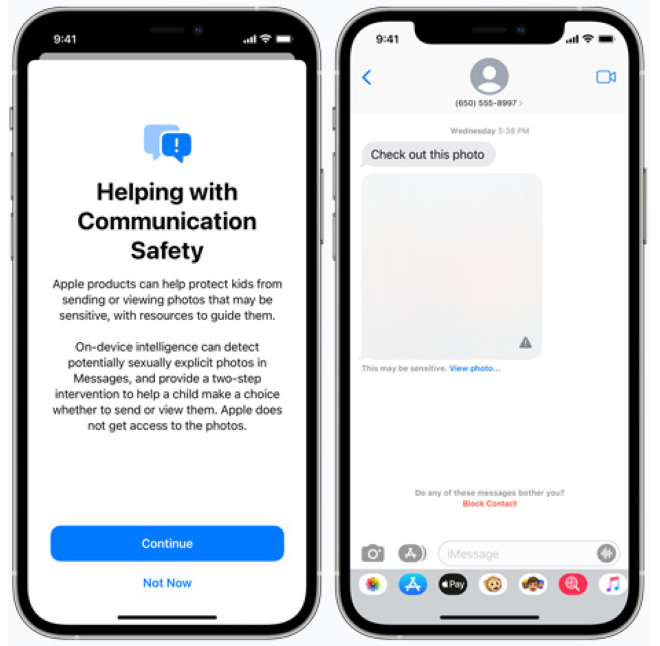

Messages App with new CSAM-prevention Warning Notifications

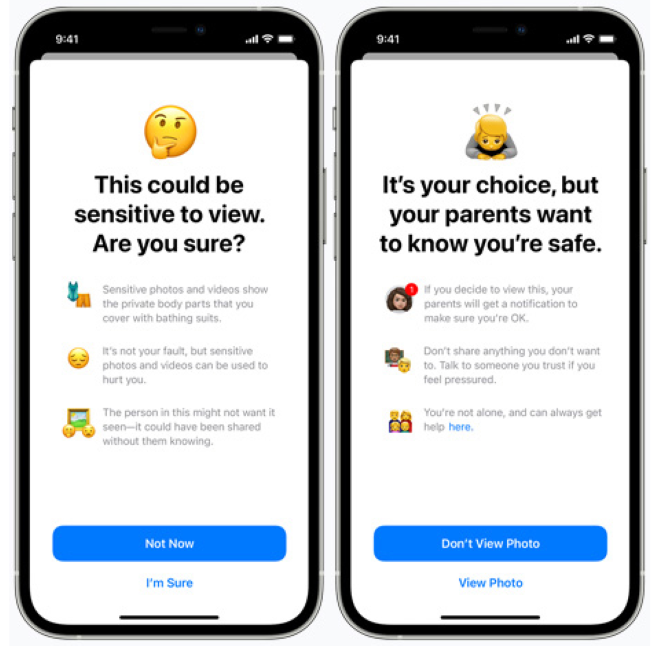

Firstly, the Messages app will soon include new notifications that warn both children and their parents whenever a child sends or receives sexually explicit photos.

Whenever someone sends a child an inappropriate image, the app will first blur the picture and then display several warning messages. According to a screenshot that Apple shared, one of them reads: “It’s not your fault, but sensitive photos and videos can be used to hurt you”.

As a secondary precaution, Apple said that the Messages app will also be able to notify parents if their child ignores that first warning and views the sensitive image anyway. The same applies to outgoing messages: in its recent blog post, the company stated that “Similar protections are available if a child attempts to send sexually explicit photos”.

According to Apple, this feature relies on on-device machine learning, which lets the Messages app determine whether or not a photo is explicit. On top of that, Apple does not have access to the messages themselves, as they’re all encrypted.

This feature will soon be available to family iCloud accounts.

iCloud CSAM-prevention Content Uploads

In addition to the new warning notifications in the Messages app, Apple will also roll out new software tools within iOS and iPadOS that allow the company to detect when a user uploads known CSAM, meaning images that show children involved in sexually explicit acts, to iCloud.

According to Apple, this new technology will be used to notify the National Center for Missing and Exploited Children (NCMEC), which in turn will work together with law enforcement agencies all across the US.

The company also mentioned that this new technology for detecting known CSAM “is designed with user privacy in mind”. That’s because, instead of scanning photos once they’re uploaded to the cloud, the system will compare them against an on-device database of “known” images provided by NCMEC and other organizations.

Apple also mentioned that this database works by assigning a hash code to each photo, which serves as a kind of digital fingerprint for that picture.
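To make the fingerprint idea a little more concrete, here is a minimal Python sketch of matching an image against a set of known hashes. It is only an illustration under stated assumptions: the article does not describe Apple's hashing scheme, so SHA-256 is used as a stand-in, and the names `fingerprint`, `known_fingerprints`, and `is_known` are hypothetical. Keep in mind that a cryptographic hash like SHA-256 only matches byte-for-byte identical files, so a real image-matching system would need a different kind of hash.

```python
import hashlib


def fingerprint(image_bytes: bytes) -> str:
    """Return a hex digest that acts as the image's fingerprint.

    SHA-256 is an assumption made for this sketch, not Apple's scheme.
    """
    return hashlib.sha256(image_bytes).hexdigest()


# Hypothetical database: it stores only fingerprints, never the images.
known_fingerprints = {
    fingerprint(b"known-image-1"),
    fingerprint(b"known-image-2"),
}


def is_known(image_bytes: bytes) -> bool:
    """Check whether an image's fingerprint appears in the database."""
    return fingerprint(image_bytes) in known_fingerprints


print(is_known(b"known-image-1"))  # True
print(is_known(b"holiday-photo"))  # False
```

The point of the design is that the database can be shipped to a device without containing a single picture, since only the fingerprints are stored.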

What allows Apple to determine whether there’s a positive match without seeing the result of each individual photo comparison is a cryptographic technique called Private Set Intersection. As part of the upload, the iPhone or iPad creates a cryptographic safety voucher that encodes the match result, along with additional encrypted data about the image.
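For readers curious about how two parties can compare sets without revealing them, below is a toy Python sketch of one classic approach to private set intersection, based on commutative blinding with secret exponents. This is not Apple's protocol, which the article does not detail and which differs in who learns the result. The modulus `P`, the helper names, and the single function that plays both parties are all assumptions made for illustration, and the parameters are far too small for real use.

```python
import hashlib
import math
import secrets

P = 2**127 - 1  # toy prime modulus (assumption); not secure in practice


def hash_to_group(item: bytes) -> int:
    """Map an item to a non-zero number modulo P."""
    return int.from_bytes(hashlib.sha256(item).digest(), "big") % P or 1


def random_exponent() -> int:
    """Pick a secret exponent that is invertible modulo P - 1."""
    while True:
        k = secrets.randbelow(P - 2) + 1
        if math.gcd(k, P - 1) == 1:
            return k


def blind(values, key):
    """Raise every value to a secret power, hiding the original."""
    return [pow(v, key, P) for v in values]


def private_intersection(client_items, server_items):
    """Simulate both parties; return the client items both sides hold."""
    a, b = random_exponent(), random_exponent()  # each side's secret
    client_once = blind([hash_to_group(x) for x in client_items], a)
    server_once = blind([hash_to_group(y) for y in server_items], b)
    # Each side blinds the other's values again. Exponentiation
    # commutes, so shared items end up with the same doubly blinded value.
    client_twice = blind(client_once, b)
    server_twice = set(blind(server_once, a))
    return [x for x, t in zip(client_items, client_twice) if t in server_twice]


print(private_intersection([b"a", b"b", b"c"], [b"b", b"c", b"d"]))
# [b'b', b'c']
```

In a real deployment the two halves would run on different machines, and neither side would ever see the other's unblinded items.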

Additionally, a second technique called Threshold Secret Sharing prevents the company from seeing any of the contents of those safety vouchers unless an account crosses an undisclosed threshold of known CSAM content. The company confirmed this by stating that “The threshold is set to provide an extremely high level of accuracy and ensures less than a one in one trillion chance per year of incorrectly flagging a given account”.

To be more specific, only when that threshold is crossed will the technology allow Apple employees to review the contents of those cryptographic safety vouchers.
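The threshold idea described above can be illustrated with Shamir's secret sharing, a well-known threshold scheme. The article does not say which construction Apple uses, so treat this Python sketch as an assumption-laden illustration: the prime `PRIME`, the function names, and the example numbers are all hypothetical. A secret is split into shares so that any `threshold` of them recover it, while fewer reveal nothing useful.

```python
import secrets

PRIME = 2**127 - 1  # toy field size (assumption); the secret must be smaller


def split_secret(secret: int, threshold: int, num_shares: int):
    """Split `secret` so that any `threshold` shares can rebuild it."""
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares):
    """Rebuild the secret by Lagrange interpolation at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


shares = split_secret(123456789, threshold=3, num_shares=5)
print(recover_secret(shares[:3]) == 123456789)  # True
```

With only two of the shares, the interpolation returns an unrelated number, which mirrors the claim that nothing can be read until the threshold is crossed.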

According to Apple, if that ever happens, the employees responsible for handling this content will manually review each report to confirm whether there’s indeed a positive match. In cases where a match is confirmed, those same employees will have the individual’s iCloud account disabled, and they’ll also forward a report to NCMEC. If a false match does occur, users will be able to appeal the suspension if they believe their account was mistakenly flagged.

Siri & Built-in Search Feature will get new Child Safety Assets

Last but not least, the third area where Apple will integrate these new Child Safety features is its own digital assistant. Both Siri and the built-in search feature found in iOS and macOS will be able to point users to child safety resources. For example, users will be able to ask Siri how they can report child exploitation.

Best of all, Apple also plans to update Siri to intervene whenever a user tries to conduct CSAM-related searches. When that happens, Siri will state that “interest in this topic is harmful and problematic”, and the assistant will also guide the user to resources that offer help with the issue.

FINAL THOUGHTS

First and foremost, it’s always good to see a tech-giant like Apple trying to implement family-friendly and user-safety functionalities that are more focused on the younger generations.

In addition to that, Apple’s effort to work together with law enforcement agencies is something that’s nice to finally see taking place. That’s because, back in 2016, Apple refused to help the FBI unlock an iPhone that belonged to one of the shooters behind the San Bernardino terror attack.

Ultimately, if Apple does start working side by side with law enforcement agencies to ensure its users’ safety, then we can probably say that the company will only gain more support and most likely draw in even more potential fans and customers.
